1. Datasets#

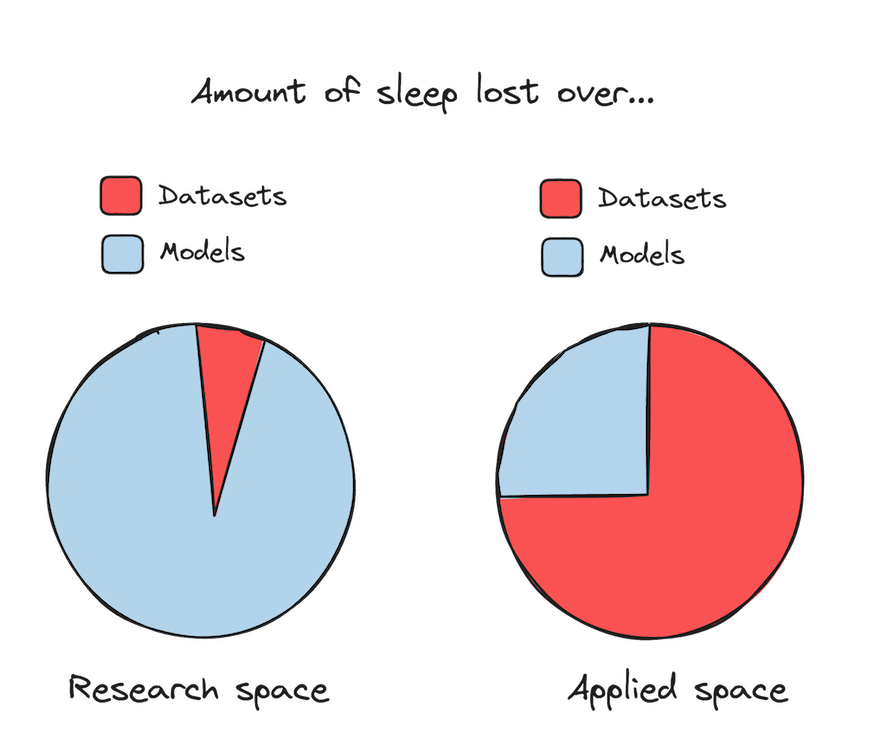

Many books and online tutorials start with a neat, static tabular dataset. However, when it comes to building predictive systems for lending business, we normally deal with data that’s far from perfect. In retail banking, it’s messy, unstructured, and constantly changing. The very first question people ask when they join a project is, “Where’s the dataset?” (also: “Is our training data the “right” data set?”).

The simple truth is there’s usually no readily available dataset. It goes without saying that it’s a practitioner’s job (not another person’s) to roll up their sleeves, collect the data, and get it in shape to solve the problem. Preparing a dataset often means sampling from all possible real-world data to create training data, and this is no trivial task as many things can go off track in the early stages of the project.

When developing a minimum viable dataset for modeling credit risk, you should be extremely motivated to understand how data works to validate the usefulness of your problem framing and your data. As a common saying goes, “garbage in - garbage out”: low-quality data, not subpar algorithmic performance, serves as a common source of risk assessment errors.

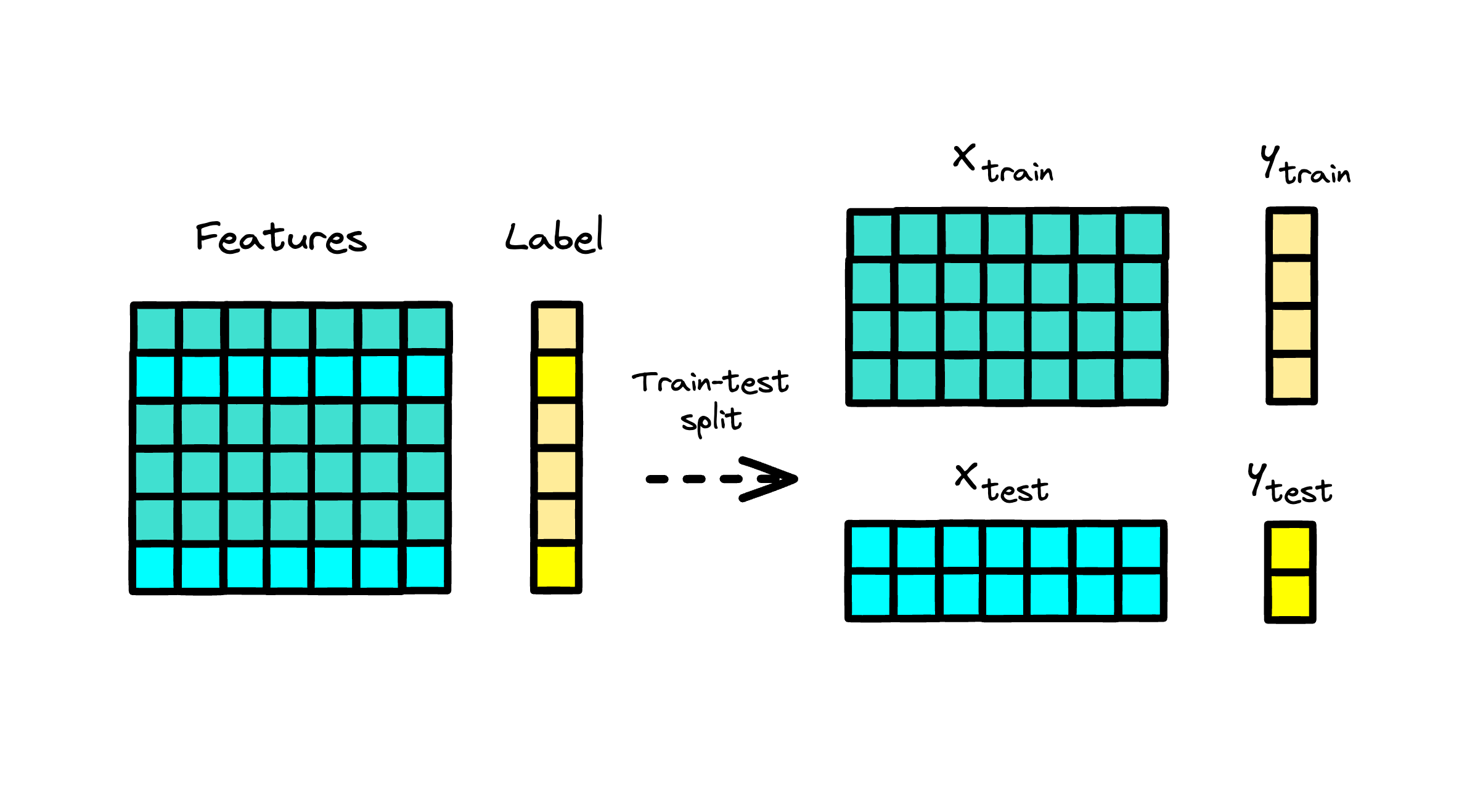

When preparing data for predictive modeling purposes, it’s essential to divide it into training and hold-out (test) sets. The training data is used to estimate a scoring function, while the hold-out data is reserved for monitoring performance on unseen data. Another way to create trainining and hold-out samples is to use a cut-off date to create an out-of-time testing data.

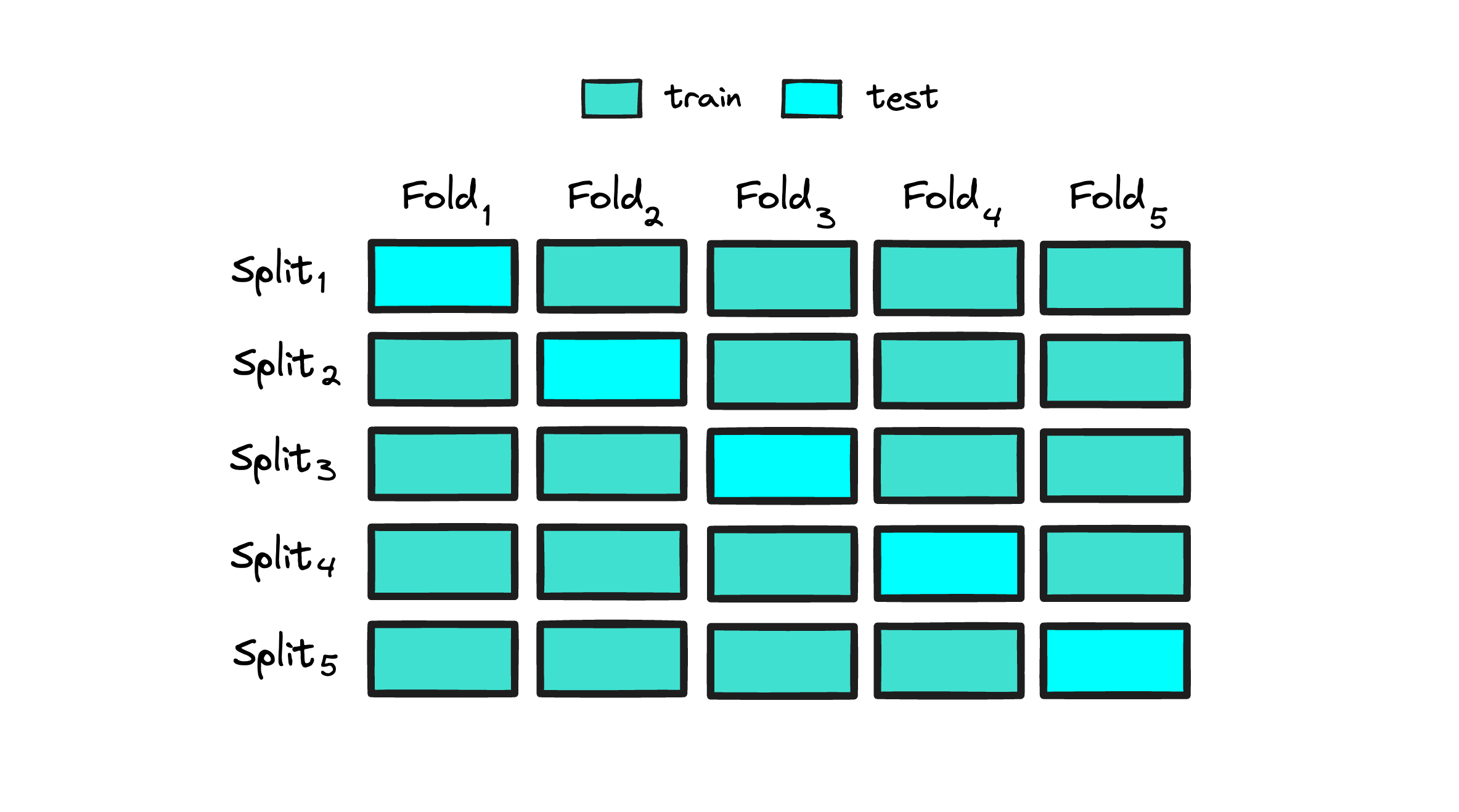

Another widely used approach is cross-validation, where the data is split into training and hold-out sets using a specified number of folds. This technique is valuable not only for model training but also for hyperparameter optimization. Cross-validation allows performance evaluation on each fold, and the results are averaged to find hyperparameters that enhance the model’s performance.

In the text that follows, you will discover resources for working with credit risk datasets and access links to publicly available credit risk data sources to get started. The aim here isn’t to delve deep into the technicalities of building default flags or performing hands-on feature engineering. Instead, the focus is to provide you with a foundational perspective on ML underwriting models that you can further expand through additional offline and online reading.

You will also find notebooks with processing dataframes using tools like Pandas and Polars, explore the basics of NumPy and a range of Python’s built-in functions that may be instrumental to modeling your domain of specialty.

... 1.1. Publicly Available Datasets#

Below you may find some known and not so well known real-world credit risk datasets that can be used for training and benchmarking performance of credit-risk models (in no particular order). Some of these datasets (in original or synthesized form) will be used throughout this guide.

1.1.1. Tabular Data#

-

For more information about the dataset, please see: AIX360 Credit Approval Tutorial.

Fannie Mae Single-Family Loan Performance Data - Fannie Mae

For more details about the datasets and their processing, please see: Loan Performance Data Tutorial.

-

For more details about the datasets and their processing, please see: Data Cleaning and Preparation for Machine Learning - dataquest.io.

1.1.2. Graph Data#

-

The German credit graph represents clients in a German bank connected with 10,000 nodes based on the similarity of their credit accounts. The task is to classify clients into good vs. bad credit risks, considering clients’ gender as the sensitive attribute.

The credit defaulter graph features 30,000 nodes representing individuals connected based on the similarity of their spending and payment patterns. The task is to predict whether an individual will default on the credit card payment or not while considering age as the sensitive attribute.

More resources to read

Explore additional resources and references for in-depth understanding of the topics covered in this section.

How (and Why) to Create a Good Validation Set

Combining Great Expectations with Fabric: Create Better ML datasets

Compare the Effect of Different Scalers on Data with Outliers

Imputing Missing Values Before Building an Estimator

Explaining Predictive Model Performance: An Experimental Study of Data Preparation and Model Choice