3. Metrics

This chapter delves into the world of credit risk metrics. It explores a range of performance measures, with a primary focus on the rank-ordering metrics used ubiquitously in credit risk analysis. It also reviews proper scoring rules, including the Brier score (mean squared error) and Log Loss, which are widely used to assess the performance of binary classification models. Finally, it covers Expected Calibration Error (ECE), a non-parametric calibration measure.

3.1. Discrimination

The ability of credit risk models to rank-order applicants, separating good risks from bad risks, has revolutionized financial services, and lending in particular. When evaluating a model's discriminatory power, practitioners usually rely on either the Gini score or the AUC score.

The ROC curve is instrumental in understanding how statistical discrimination works. All observations are sorted in decreasing order of score (for example, predicted probability). At each unique threshold we then count the true positives and false positives. The true positive rate (TPR) is computed only over actual positives, and the false positive rate (FPR) only over actual negatives. Because each rate is normalized within its own class, evaluating TPR and FPR across classification thresholds makes the metric usable on imbalanced data.
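The sorting-and-counting procedure above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the notebook's implementation:

- The function name `roc_points` and the toy labels and scores are hypothetical.
- A label of 1 marks a defaulter.
- A higher score means a higher predicted risk.

```python
def roc_points(y_true, y_score):
    """Return (FPR, TPR) pairs, one per unique score threshold.

    y_true: 1 = defaulter (positive class), 0 = non-defaulter.
    y_score: higher score = higher predicted risk of default (assumption).
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Sort observations by score, highest first.
    ranked = sorted(zip(y_score, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]  # threshold above the highest score: nothing flagged
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Flag every observation tied at this threshold before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


# Hypothetical toy portfolio: 3 defaulters, 5 non-defaulters.
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.4, 0.35, 0.2, 0.1]
points = roc_points(y_true, y_score)
for fpr, tpr in points:
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

`sklearn.metrics.roc_curve` returns the same kind of FPR/TPR/threshold sequence. By default (`drop_intermediate=True`) it drops collinear intermediate points, so it can return fewer points than this sketch.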

Better class separation shows up as a ROC curve that bows further toward the top-left corner, that is, one with larger concave segments above the diagonal. This in turn yields a higher AUC (Area Under the Curve) score.
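To make the link between curve shape and AUC concrete, here is a minimal sketch that integrates a ROC curve with the trapezoidal rule. Both the function name `auc_trapezoid` and the "bowed" curve are hypothetical illustrations. The points are assumed to be sorted by increasing FPR.

```python
def auc_trapezoid(points):
    """Area under a ROC curve given (FPR, TPR) points sorted by increasing FPR."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        # Trapezoid between consecutive points; vertical steps add zero area.
        area += (x1 - x0) * (y0 + y1) / 2
    return area


diagonal = [(0.0, 0.0), (1.0, 1.0)]                        # random ranking
bowed = [(0.0, 0.0), (0.1, 0.6), (0.3, 0.85), (1.0, 1.0)]  # hypothetical model
print(auc_trapezoid(diagonal))         # 0.5
print(round(auc_trapezoid(bowed), 4))  # 0.8225
```

The further the curve bows above the diagonal, the larger the area it encloses.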

The ROC curve and AUC score are less sensitive to class imbalance than Precision-Recall and other confusion-matrix-based metrics (e.g., Accuracy, F1 score). This makes AUC a good discrimination metric for credit risk, where defaulters are generally few relative to non-defaulters.
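A small, hypothetical experiment illustrates the point. It computes AUC as the probability that a randomly chosen defaulter outscores a randomly chosen non-defaulter (ties count one half). The sketch then shows two things:

- A model with no discriminatory power can still reach high accuracy on an imbalanced portfolio, while its AUC stays at 0.5.
- Replicating the non-defaulters, which changes the class balance, leaves AUC unchanged.

The helper names and data are illustrative assumptions.

```python
def auc_rank(y_true, y_score):
    """AUC as P(random defaulter scores above random non-defaulter), ties = 1/2."""
    pos = [s for s, y in zip(y_score, y_true) if y == 1]
    neg = [s for s, y in zip(y_score, y_true) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def accuracy(y_true, y_pred):
    return sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical portfolio: 2 defaulters out of 100 loans.
y_true = [1, 1] + [0] * 98
flat_score = [0.1] * 100                           # useless model: same score for all
flat_pred = [int(s >= 0.5) for s in flat_score]    # so it predicts "no default" for all
print(accuracy(y_true, flat_pred))   # 0.98: looks excellent
print(auc_rank(y_true, flat_score))  # 0.5: no discrimination at all

# Ten copies of every non-defaulter change the class balance but not the AUC,
# because TPR and FPR are each normalized within their own class.
y_small, s_small = [1, 0, 1, 0], [0.8, 0.3, 0.4, 0.6]
y_big, s_big = [1, 1] + [0] * 20, [0.8, 0.4] + [0.3, 0.6] * 10
print(auc_rank(y_small, s_small), auc_rank(y_big, s_big))  # 0.75 0.75
```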

The notebook provides practical examples and insights into the following rank-ordering metrics, essential for credit risk analysis:

ROC Curve: The Receiver Operating Characteristic (ROC) curve is a powerful tool for visualizing the performance of binary classifiers as the discrimination threshold varies.

Gini Score: The Gini score is a metric used to assess the discriminatory power of a model. It can be derived from the AUC score (area under the ROC curve) as

$$\text{Gini} = 2 \times \text{AUC} - 1$$

Profit Curve: The profit curve applies a constant cost-benefit matrix to the confusion matrix obtained at each unique ROC curve threshold. It helps evaluate the profitability of a model's predictions and, therefore, the business benefit of using the model.
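A minimal sketch of a profit curve follows, under these assumptions:

- Every loan scoring at or above the threshold is declined.
- Positives are defaulters.
- The cost-benefit values are hypothetical placeholders, not figures from the notebook.

The sketch also evaluates an "approve everyone" baseline (an infinite threshold), mirroring the (0, 0) point of the ROC curve.

```python
def profit_curve(y_true, y_score, value):
    """Total profit at each threshold, declining every loan with score >= threshold.

    value: constant cost-benefit matrix keyed by confusion-matrix cell
    ("tp", "fp", "tn", "fn"); positives are defaulters.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Infinite threshold first (nobody declined), then every unique score.
    thresholds = [float("inf")] + sorted(set(y_score), reverse=True)
    curve = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(y_score, y_true) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(y_score, y_true) if s >= t and y == 0)
        fn = n_pos - tp
        tn = n_neg - fp
        profit = (tp * value["tp"] + fp * value["fp"]
                  + tn * value["tn"] + fn * value["fn"])
        curve.append((t, profit))
    return curve


# Hypothetical economics: approving a good customer earns 100, approving a
# defaulter loses 500, and declined applications earn nothing either way.
value = {"tp": 0, "fp": 0, "tn": 100, "fn": -500}
y_true = [1, 0, 1, 0, 0, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.4, 0.35, 0.2, 0.1]

curve = profit_curve(y_true, y_score, value)
best_threshold, best_profit = max(curve, key=lambda point: point[1])
print(best_threshold, best_profit)  # 0.35 200
```

Because the matrix is held constant, the profit at each threshold is a linear function of the confusion-matrix counts. Changing the economics shifts the profit-maximizing threshold without changing the model.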

Somers’ D Score: Somers’ D is a rank correlation coefficient that measures the strength and direction of association between ordinal variables. It is particularly relevant in credit risk analysis because, for a binary default flag, the Somers’ D of the score equals the Gini score.
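The equivalence between Somers’ D and Gini can be checked numerically. The sketch below makes these choices (function names and data are hypothetical):

- Somers’ D is computed as (concordant − discordant) / (number of defaulter/non-defaulter pairs).
- Pairs tied on score count as neither concordant nor discordant.
- The result is compared with 2 × AUC − 1, where AUC counts ties as one half.

```python
def somers_d(y_true, y_score):
    """Somers' D of the score with respect to a binary default flag."""
    pos = [s for s, y in zip(y_score, y_true) if y == 1]
    neg = [s for s, y in zip(y_score, y_true) if y == 0]
    concordant = sum(1 for p in pos for n in neg if p > n)  # defaulter ranked riskier
    discordant = sum(1 for p in pos for n in neg if p < n)  # non-defaulter ranked riskier
    return (concordant - discordant) / (len(pos) * len(neg))


def auc_pairwise(y_true, y_score):
    """AUC as P(random defaulter outscores random non-defaulter), ties = 1/2."""
    pos = [s for s, y in zip(y_score, y_true) if y == 1]
    neg = [s for s, y in zip(y_score, y_true) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 0, 1, 0, 0, 1, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.6, 0.4, 0.35, 0.2, 0.1]
d = somers_d(y_true, y_score)
gini = 2 * auc_pairwise(y_true, y_score) - 1  # Gini = 2 * AUC - 1
print(round(d, 4), round(gini, 4))  # 0.4667 0.4667
```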

Cumulative LGD Accuracy Ratio (CLAR): CLAR is a metric designed to assess the rank-ordering performance of loss given default (LGD) models by comparing predicted LGDs with realized LGDs.

More resources to read

Explore additional resources and references for an in-depth understanding of the topics covered in this section.

Why is Accuracy Not the Best Measure for Assessing Classification Models?

Damage Caused by Classification Accuracy and Other Discontinuous Improper Accuracy Scoring Rules

Beyond Accuracy: Measures for Assessing Machine Learning Models, Pitfalls and Guidelines

Get the Best From Your Scikit-Learn Classifier

ROC Curves for Continuous Data

User Churn Prediction: A Machine Learning Example

Machine Learning with SAP HANA & R – Evaluate the Business Value

Animations with Receiver Operating Characteristic and Precision-Recall Curves

3.2. Calibration

Model calibration is a fundamental yet often overlooked concept in predictive modeling, although this is less the case for credit risk models. The reason is that a miscalibrated model may lead to adverse selection of credit risk (failure of scores): customers who receive an inaccurate probability of default may be less likely to repay than the model implied.

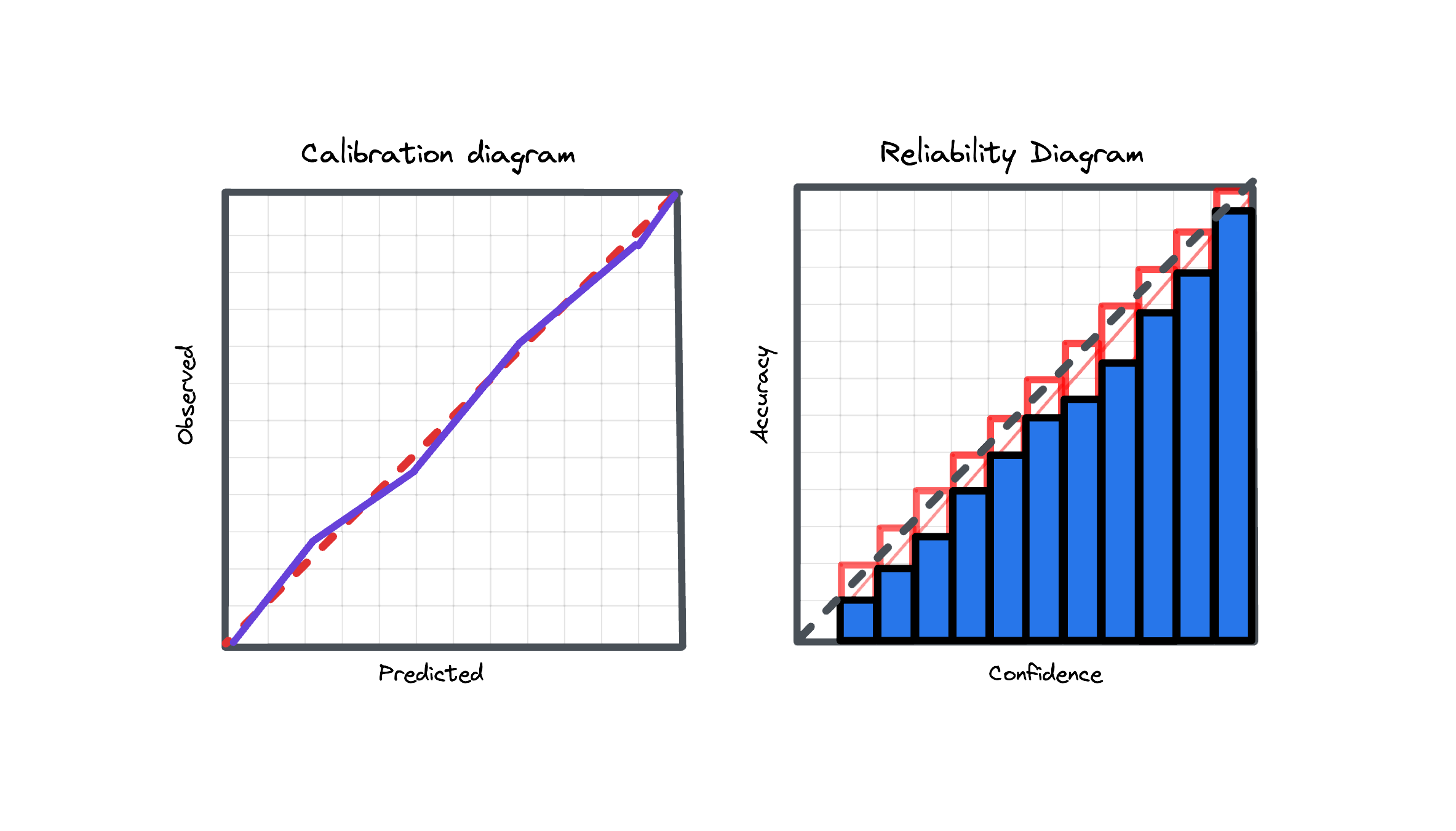

To understand calibration better, consider a model that predicts default with a 5% probability. Statistically, this means that across all cases receiving such a prediction, about 5% should actually default. If we instead observe a 10% default rate, the model is miscalibrated. Several options exist to correct this, including parametric approaches (e.g., Platt scaling) and non-parametric ones (e.g., isotonic regression).
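One simple non-parametric way to correct this kind of miscalibration is histogram binning: replace each predicted probability with the observed default rate of its probability bin. The sketch below is an illustrative assumption, not the notebook's method. The following are choices of this sketch:

- the function names;
- the 10 equal-width bins;
- the fallback used for empty bins.

```python
def fit_histogram_binning(y_prob, y_true, n_bins=10):
    """Learn the observed default rate in each equal-width probability bin."""
    counts = [0] * n_bins
    defaults = [0] * n_bins
    for p, y in zip(y_prob, y_true):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        counts[b] += 1
        defaults[b] += y
    # Assumption of this sketch: an empty bin keeps its own midpoint as the estimate.
    return [defaults[b] / counts[b] if counts[b] else (b + 0.5) / n_bins
            for b in range(n_bins)]


def apply_histogram_binning(rates, y_prob):
    """Replace each raw probability with the observed default rate of its bin."""
    n_bins = len(rates)
    return [rates[min(int(p * n_bins), n_bins - 1)] for p in y_prob]


# The 5% vs 10% scenario: 20 hypothetical loans scored at 5%, of which 2 default.
raw = [0.05] * 20
outcomes = [1, 1] + [0] * 18
rates = fit_histogram_binning(raw, outcomes)
calibrated = apply_histogram_binning(rates, raw)
print(calibrated[0])  # 0.1
```

In practice the mapping should be fitted on a held-out calibration sample rather than on the training data. scikit-learn's `CalibratedClassifierCV` offers two standard alternatives: `method="sigmoid"` (Platt scaling, parametric) and `method="isotonic"` (non-parametric).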

Calibration is therefore a critical property of predictive systems used for risk assessment: it ensures that their output probabilities are reliable and match observed frequencies.

This chapter explores some calibration metrics, which help determine how well a model’s predicted probabilities align with the actual outcomes:

Brier Score: The Brier score is the mean squared error between predicted probabilities and the observed 0/1 outcomes. It is a proper scoring rule used to assess the accuracy of probabilistic predictions, and it can be decomposed into calibration loss and refinement loss.
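The decomposition can be sketched as follows, assuming observations are grouped by their distinct predicted probability. Under that grouping the two terms sum exactly to the Brier score:

- Calibration loss is the size-weighted squared gap between each forecast and the observed rate in its group.
- Refinement loss is the size-weighted outcome variance within each group.

The function names and data are hypothetical.

```python
import math
from collections import defaultdict


def brier_score(y_true, y_prob):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(y_prob, y_true)) / len(y_true)


def brier_decomposition(y_true, y_prob):
    """Return (calibration loss, refinement loss), grouping by predicted probability."""
    groups = defaultdict(list)
    for p, y in zip(y_prob, y_true):
        groups[p].append(y)
    n = len(y_true)
    calibration = refinement = 0.0
    for p, outcomes in groups.items():
        n_k = len(outcomes)
        rate = sum(outcomes) / n_k              # observed default rate in the group
        calibration += n_k * (p - rate) ** 2    # gap between forecast and reality
        refinement += n_k * rate * (1 - rate)   # outcome variance within the group
    return calibration / n, refinement / n


# Hypothetical forecasts: four loans at 10% (one defaults), five at 60% (three default).
y_prob = [0.1] * 4 + [0.6] * 5
y_true = [0, 0, 0, 1] + [1, 1, 0, 1, 0]

bs = brier_score(y_true, y_prob)
cal, ref = brier_decomposition(y_true, y_prob)
print(math.isclose(bs, cal + ref))  # True
```

With continuous scores, observations are usually grouped into probability bins instead. The decomposition then becomes an approximation. `sklearn.metrics.brier_score_loss` computes the score itself.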

Log Loss: Log Loss, also referred to as Logarithmic Loss or Cross-Entropy Loss, is a widely used evaluation metric for binary classification models. It measures how closely each predicted probability matches the corresponding ground truth (0 or 1, typical for binary classification tasks) by averaging the negative logarithm of the probability assigned to the observed outcome. A lower Log Loss indicates better model performance.
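A minimal sketch of Log Loss follows. Probabilities are clipped away from 0 and 1 so the logarithm stays finite; the clipping constant `eps` is an assumption of this sketch. The example shows how confident but wrong predictions are penalized far more heavily than hedged ones.

```python
import math


def log_loss(y_true, y_prob, eps=1e-15):
    """Mean negative log-likelihood of observed 0/1 outcomes.

    Probabilities are clipped to [eps, 1 - eps] so that log(0) is never evaluated.
    """
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


y_true = [1, 0]
print(round(log_loss(y_true, [0.9, 0.1]), 4))  # confident and right: 0.1054
print(round(log_loss(y_true, [0.6, 0.4]), 4))  # hedged and right:    0.5108
print(round(log_loss(y_true, [0.1, 0.9]), 4))  # confident and wrong: 2.3026
```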

Expected Calibration Error (ECE): Expected Calibration Error measures calibration as the weighted average, over probability bins, of the absolute difference between the mean predicted probability and the observed event rate. Each bin is weighted by its share of observations. As per the original paper, 10 equal-width bins are used; however, this analysis can also be performed at the ROC-segment level.
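A minimal sketch of ECE with 10 equal-width bins follows, applied to the 5% vs 10% scenario from earlier in this section. The function name and data are hypothetical. Empty bins are skipped because they carry zero weight.

```python
def expected_calibration_error(y_true, y_prob, n_bins=10):
    """Sum over non-empty equal-width bins of
    (bin share of observations) * |mean predicted probability - observed event rate|."""
    n = len(y_true)
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(y_prob, y_true):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[b].append((p, y))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_pred = sum(p for p, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        ece += len(members) / n * abs(mean_pred - observed)
    return ece


# 20 hypothetical loans predicted at 5%, of which 2 default (observed rate 10%).
print(round(expected_calibration_error([1, 1] + [0] * 18, [0.05] * 20), 4))  # 0.05
```

To work at the ROC-segment level instead, the equal-width bins could be replaced by groups of observations sharing the same unique score.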

More resources to read

Explore additional resources and references for an in-depth understanding of the topics covered in this section.

On Calibration of Modern Neural Networks

Why Model Calibration Matters and How to Achieve It